Using AI (Computer User Agent) models in automated testing for easier and quicker tests

One of Scriptide's staff software engineers, Botond Kovács, built a proof-of-concept (POC) TypeScript framework that combines OpenAI's Computer User Agent with traditional end-to-end testing frameworks such as Playwright and Puppeteer. The POC makes automated software tests easier and quicker to write: it treats the application under test as a black box instead of relying on test IDs, so the original application needs no code changes.

Written by

Botond Kovács

Last updated

NOV 10, 2025

Topics

#dev

Length

6 min read

AI-based End-To-End Test Automation

In this article, we explore how Computer User Agents (CUAs) can be utilized for test automation. This new technology allows us to write tests with minimal programming knowledge and no modifications to the application under test.

The conventional approach to end-to-end testing usually involves modifying the code of the application itself to include so-called test IDs. Using these test IDs, we can identify elements on the page under test and perform actions on them.

AI technology, however, allows us to interact with the application just like a human would — by looking at the screen and deciding what to do next. This opens up new possibilities for test automation.

We have also experimented with creating test cases in non-English languages, such as Japanese and Hungarian. At the end of the article, we also share the limitations of this approach that we have discovered.

Quick Demonstration

Before diving into the details, let's see a quick demo of how this works in practice.

Meet The Model

Earlier this year, OpenAI released the computer-use-preview model, which also powers OpenAI's product called Operator and ChatGPT's new Agent Mode. This model is designed to interact with graphical user interfaces.

Although the products built on top of this model were intended for automating tasks such as grocery shopping, we had a different idea: what if we used this model to automate testing?

Computer User Agents look at screenshots and decide what input actions to perform. For example, a model can click on something on the screen, scroll the page, or type using the keyboard.

Our goal was to turn this model into an AI Tester, which can perform testing of the application just like a human tester would.

To explore this idea, we first sketched out what test cases should look like. We decided that test cases executed by the AI Tester should be as close to manual test cases as possible. This is not traditional end-to-end test automation, but something new: the automation of the execution of test plans.

Writing Test Cases

To perform test automation this way, we first had to figure out how to write the test cases. We sketched out how an example test case should look — this allowed us to understand the API we wanted to build on top of the model. We came up with this:

Although this is code, it is very high level and does not require any technical knowledge of the underlying application. The test case reads like a manual test case and can be written by anyone who understands how the user interface of the application looks.

- clickOn(description): instructs the tester to click on something on the screen, identified by the given description.

- fillIn(fields): instructs the tester to fill in the given fields, for example on a form.

- expectOnScreen(description): instructs the tester to verify that something is true about what is currently visible on the screen.

If we had these functions, we could describe virtually any test case for a UI application!
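As a rough illustration of the three functions described above, the sketch below declares them on a hypothetical Tester interface and uses them in an invented sign-up scenario. The interface name, the Promise-returning signatures, the shape of the fields argument, and the RecordingTester stand-in are all assumptions made for this example; they are not taken from the framework itself.

```typescript
// Hypothetical shape of the three steps; the real framework's signatures may differ.
interface Tester {
  clickOn(description: string): Promise<void>;
  fillIn(fields: Record<string, string>): Promise<void>;
  expectOnScreen(description: string): Promise<void>;
}

// A stand-in tester that only records the steps it is given, so the
// example runs without a browser or a model.
class RecordingTester implements Tester {
  readonly steps: string[] = [];

  async clickOn(description: string): Promise<void> {
    this.steps.push(`click: ${description}`);
  }

  async fillIn(fields: Record<string, string>): Promise<void> {
    for (const [label, value] of Object.entries(fields)) {
      this.steps.push(`fill: ${label} = ${value}`);
    }
  }

  async expectOnScreen(description: string): Promise<void> {
    this.steps.push(`expect: ${description}`);
  }
}

// An invented sign-up scenario, written the way a manual test case reads.
async function signUpTest(tester: Tester): Promise<void> {
  await tester.clickOn("The sign up button");
  await tester.fillIn({
    "Email address": "jane@example.com",
    "Password": "a strong password",
  });
  await tester.clickOn("The button that submits the form");
  await tester.expectOnScreen("A message confirming that the account was created");
}
```

Every argument is a plain-language description of what is visible on screen, so nothing in the test refers to selectors or test IDs.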

TypeScript and JavaScript allow Unicode characters in function and variable names, meaning we can write test case code in any language! For example, here is the same test case in Japanese:

And here it is in Hungarian:

Naturally, we proceeded by implementing these functions. Let's take a look at how clickOn works under the hood.

Making The AI Click

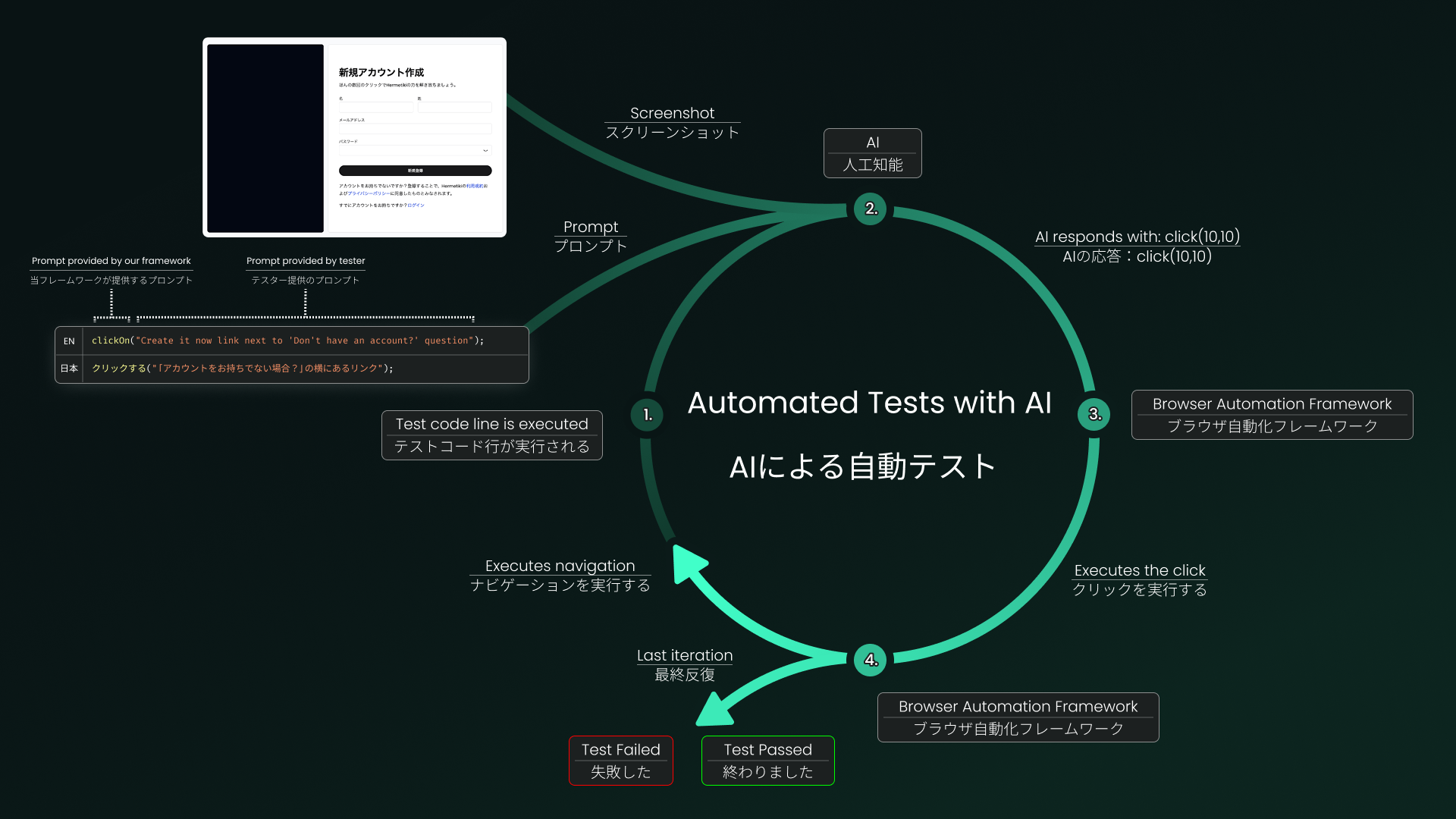

OpenAI recommends using the computer-use-preview model by implementing a so-called Computer User Agent Loop.

In each iteration of this loop:

- We take a screenshot of the current state of the application.

- We send the screenshot to the model, along with any other messages.

- The model decides to either:

  - use the computer to perform some input action (e.g. clicking on coordinates, typing text, etc.), or

  - respond with a message, indicating that it has completed the task or cannot continue on its own.

- We perform the model's input actions and wait a little so that all inputs are processed.

- We repeat the loop until the model responds with a message.

This is exactly how Operator and ChatGPT Agent Mode work.
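The loop described above can be sketched as follows. This is a minimal outline under stated assumptions, not the framework's implementation: the Model and Computer interfaces, the InputAction and ModelTurn types, and the runStep function are hypothetical seams standing in for the OpenAI client and the browser automation library. The maxIterations cap is an added safety measure that the steps above do not mention.

```typescript
// All names below are hypothetical; they are not OpenAI SDK or Puppeteer APIs.
type InputAction =
  | { kind: "click"; x: number; y: number }
  | { kind: "type"; text: string }
  | { kind: "scroll"; deltaY: number };

type ModelTurn =
  | { kind: "actions"; actions: InputAction[] }
  | { kind: "message"; text: string };

interface Computer {
  screenshot(): Promise<string>; // e.g. a base64-encoded PNG
  perform(action: InputAction): Promise<void>;
}

interface Model {
  next(screenshot: string, earlierTurns: ModelTurn[]): Promise<ModelTurn>;
}

const sleep = (ms: number) =>
  new Promise<void>((resolve) => setTimeout(resolve, ms));

// Runs one test step: loops until the model answers with a message.
async function runStep(
  model: Model,
  computer: Computer,
  options = { maxIterations: 20, settleMs: 500 },
): Promise<string> {
  const turns: ModelTurn[] = [];
  for (let i = 0; i < options.maxIterations; i++) {
    // 1. Capture the current state of the application.
    const screenshot = await computer.screenshot();
    // 2. Let the model decide what to do next.
    const turn = await model.next(screenshot, turns);
    turns.push(turn);
    // 3. A message ends the loop (task done, or the model cannot continue).
    if (turn.kind === "message") return turn.text;
    // 4. Otherwise perform the requested inputs and let the UI settle.
    for (const action of turn.actions) {
      await computer.perform(action);
    }
    await sleep(options.settleMs);
  }
  throw new Error(`No final message after ${options.maxIterations} iterations`);
}
```

Keeping the model and the browser behind small interfaces also lets the loop be exercised with fakes, without spending tokens.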

We implemented this loop, along with a strict system prompt instructing the model to perform only a single, simple step and do nothing else. The instructions must also be clear about what to do in edge cases, such as when the step cannot be completed.

This is the exact final system prompt we came up with:

We decided to include edge-case handling instructions in the prompt of the specific actions. For example, here is the prompt we used for the clickOn action:

... where {{targetDescription}} is replaced with the actual description of the element to click on, for example "The sign up button".
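The placeholder substitution can be illustrated with a small helper. The renderPrompt function and the example template text are assumptions made for this sketch; the template is deliberately not the framework's real clickOn prompt.

```typescript
// Replaces every {{name}} placeholder with the matching value.
// Throws on unknown placeholders so that a typo cannot silently reach the model.
function renderPrompt(template: string, values: Record<string, string>): string {
  return template.replace(/\{\{(\w+)\}\}/g, (_match: string, key: string) => {
    const value = values[key];
    if (value === undefined) {
      throw new Error(`Missing value for placeholder "${key}"`);
    }
    return value;
  });
}

// Illustrative template only; not the prompt used in the framework.
const exampleTemplate = "Click on the following element: {{targetDescription}}.";

const clickPrompt = renderPrompt(exampleTemplate, {
  targetDescription: "The sign up button",
});
```

Failing loudly on a missing value is a design choice for this sketch: an unreplaced placeholder would otherwise be sent to the model as literal text.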

Making It Useful

After a few rounds of thinking and several proofs of concept, we chose Mocha as the test runner and Puppeteer as the browser automation library. We built a small framework around these tools, which provides the high-level API we wanted.

With that in place, we can start writing test cases by creating .test.js files.

To make them run, all we have to do is run the following command:

It is important to set a high timeout, as each step can take a while to complete. The model is highly capable, but also slow at times.

Translating To Other Languages

We perform a lot of multilingual user interface testing. From the perspective of the test framework, this meant two things for us:

- We want to write tests once, but have them work in any language understood by the AI.

- We want to write tests in either our own language or the customer's language, so that requirements for system behavior are communicated precisely.

For the first problem, we introduced the generateDataField function. This function:

- Checks the language of the page, using the lang attribute of the HTML document

- Instructs the AI to generate data for a field, provided a description, but regardless of the language of the description, it must generate it in the language of the page.
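A minimal sketch of those two steps is shown below. Only the name generateDataField comes from the framework; the PageLike and AskModel seams, the parameter list, the wording of the instruction, and the fallback to "en" when no lang attribute is present are assumptions for illustration.

```typescript
// Hypothetical seams: how the page language is read and how the model is asked.
interface PageLike {
  // With Puppeteer, one possible implementation is
  // page.evaluate(() => document.documentElement.lang).
  getLang(): Promise<string>;
}

type AskModel = (instruction: string) => Promise<string>;

async function generateDataField(
  page: PageLike,
  ask: AskModel,
  fieldDescription: string,
): Promise<string> {
  // Step 1: read the page language; "en" is an assumed fallback.
  const pageLang = (await page.getLang()).trim() || "en";

  // Step 2: ask for a value in the page's language, whatever language
  // the field description itself is written in.
  const instruction =
    `Generate one realistic value for this form field: "${fieldDescription}". ` +
    `The description may be in any language, but the value must be written in ` +
    `the language with code "${pageLang}". Reply with the value only.`;

  return (await ask(instruction)).trim();
}
```

Because the page language is looked up at run time, the same test can run against differently localized builds of the application.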

Solving the second problem wasn't an issue either, thanks to TypeScript and JavaScript's Unicode support. We created the aliases.ja.ts and aliases.hu.ts files, which basically do this:

We export an alias for each function we have in our framework. This way, we can write test cases in any language we want!
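The aliasing idea can be sketched as below. The stand-in functions and the Hungarian and Japanese alias names are invented for this example and are not the contents of the real aliases.hu.ts or aliases.ja.ts files. In an actual alias file, each constant would be exported.

```typescript
// Stand-ins for the framework functions, so that the example is self-contained.
const recordedSteps: string[] = [];

function clickOn(description: string): void {
  recordedSteps.push(`click: ${description}`);
}

function expectOnScreen(description: string): void {
  recordedSteps.push(`expect: ${description}`);
}

// Hungarian aliases (invented names; roughly "click here" and "should be visible").
const kattintsIde = clickOn;
const legyenLátható = expectOnScreen;

// Japanese aliases (invented names; roughly "click" and "is shown on screen").
const クリックする = clickOn;
const 画面に表示されている = expectOnScreen;

// The aliases call the same underlying functions.
クリックする("登録ボタン");
画面に表示されている("確認メッセージ");
kattintsIde("A regisztráció gomb");
legyenLátható("Megerősítő üzenet");
```

An alias is just another name bound to the same function, so the behavior of a step is defined in exactly one place regardless of the language the test is written in.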

Limitations

It is important to share some limitations of this approach. Since we rely on AI technology, it can never be 100% perfect — although we expect these limitations to decrease as AI model quality improves. We also built this proof-of-concept on top of a research preview model, which is not fully ready for production.

- The computer-use-preview model sometimes struggles to understand non-English interfaces. We tested it extensively with Japanese interfaces, and while it often works, its unreliability creates false positives and false negatives that must be manually reviewed.

- The model does not support structured outputs yet, and sometimes fails to follow response format instructions. Deciding whether a step has passed or failed can be difficult. We mitigated this by using a second model to review CUA outputs, adding cost and complexity.

- The model works best for larger interface elements, but struggles with actions requiring pixel-perfect precision. It fails notably with small icon-only buttons in video games.

- Due to latency, certain real-world behavior cannot be reliably tested. For example, auto-disappearing toast messages are sometimes missed, as they vanish before the model can react.
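To make the pass/fail problem from the list above concrete, here is one possible way to combine strict parsing with a reviewing model. It is a sketch under assumptions: the "PASS" / "FAIL" first-line convention, the Verdict type, and the Reviewer callback are hypothetical, and the source does not describe the response format or the review mechanism in this detail.

```typescript
type Verdict = "pass" | "fail";

// Hypothetical second model that reads the raw CUA output and decides.
type Reviewer = (rawOutput: string) => Promise<Verdict>;

// Accepts only an unambiguous first line such as "PASS" or "FAIL: reason".
// Anything else counts as "format not followed" and yields undefined.
function parseVerdict(rawOutput: string): Verdict | undefined {
  const firstLine = (rawOutput.trim().split("\n")[0] ?? "").trim();
  const match = /^(PASS|FAIL)\b/i.exec(firstLine);
  if (!match) return undefined;
  return (match[1] ?? "").toUpperCase() === "PASS" ? "pass" : "fail";
}

// Uses the cheap strict parse first and calls the reviewer only when the
// CUA output did not follow the expected format.
async function decideVerdict(rawOutput: string, review: Reviewer): Promise<Verdict> {
  return parseVerdict(rawOutput) ?? (await review(rawOutput));
}
```

Calling the reviewer only for malformed output is one way to limit the added cost; a stricter setup could send every output to the reviewer.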

Future Work

We plan to use this technique to quickly create test cases for our own products and for customer projects. Another use case is creating application data without APIs or database snapshots: we simply describe the steps that bring the system into the desired state.

Another idea to explore is to record the steps taken by the model, and re-use them later as conventional end-to-end tests. This would allow us to quickly create end-to-end tests for applications, and then run them without invoking the CUA model every time. The downside of this approach is that the recorded tests would be brittle (using hardcoded IDs or selectors), and require constant maintenance, just like regular end-to-end tests.

We are also exploring techniques to make this approach more reliable and cost-effective.

- One technique we are currently experimenting with is to try a non-CUA model that understands the DOM under test before invoking the CUA. If that model cannot perform the step (for example, because test IDs are missing), we invoke the CUA as a fallback. This reduces the number of CUA invocations, and thus the cost and time of running the tests.

- We are also experimenting with multiple techniques to detect false positives and false negatives more reliably. Other AI models can review the evidence collected during the CUA loop (screenshots, browser logs, actions, etc.) and decide whether the CUA's original conclusion was correct.

- Another way to reduce the cost of the CUA loop is better conversation state management. Realistically, we only need to keep the last few rounds and can discard earlier messages. This would limit the context size required for any step, and thus reduce the token count.
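The conversation trimming idea from the last point can be sketched as follows. The ChatMessage shape and the trimHistory function are assumptions for illustration; a real API may also require that an action and its result are kept or dropped together, which this sketch does not handle.

```typescript
// Hypothetical message shape, for illustration only.
interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

// Keeps all system messages plus the last `maxRecent` non-system messages,
// preserving their original order.
function trimHistory(messages: ChatMessage[], maxRecent: number): ChatMessage[] {
  const system = messages.filter((m) => m.role === "system");
  const rest = messages.filter((m) => m.role !== "system");
  // slice(-0) would return the whole array, so zero is handled explicitly.
  const recent = maxRecent > 0 ? rest.slice(-maxRecent) : [];
  return [...system, ...recent];
}

const fullHistory: ChatMessage[] = [
  { role: "system", content: "Perform a single, simple step." },
  { role: "user", content: "screenshot 1" },
  { role: "assistant", content: "click at (10, 20)" },
  { role: "user", content: "screenshot 2" },
  { role: "assistant", content: "type 'hello'" },
];

// Keeps the system message and only the most recent round.
const trimmedHistory = trimHistory(fullHistory, 2);
```

The system prompt is always retained because it carries the instructions for the step; only older screenshots and actions are dropped.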

Interested In This POC?

If you are interested in this POC, feel free to reach out to us. We would be happy to show the solution and test it for your use case.
